Summary: Redundant control and power systems turn equipment failures into non-events, so hospitals, water plants, data centers, and transport networks keep running even when something critical breaks.

In mission-critical sites, a “momentary outage” is not a nuisance; it is a safety and reputational event. Studies cited by Maverick Power and Giva show that an hour of downtime can easily exceed $100,000.00, with many incidents reaching $1–5 million.

For hospitals, control rooms, and utilities, the more serious cost is loss of life-safety functions: dark operating rooms, silent pumps, or frozen SCADA screens when operators need them most. EIS Council and CISA both frame redundancy as a core resilience strategy, not a luxury.

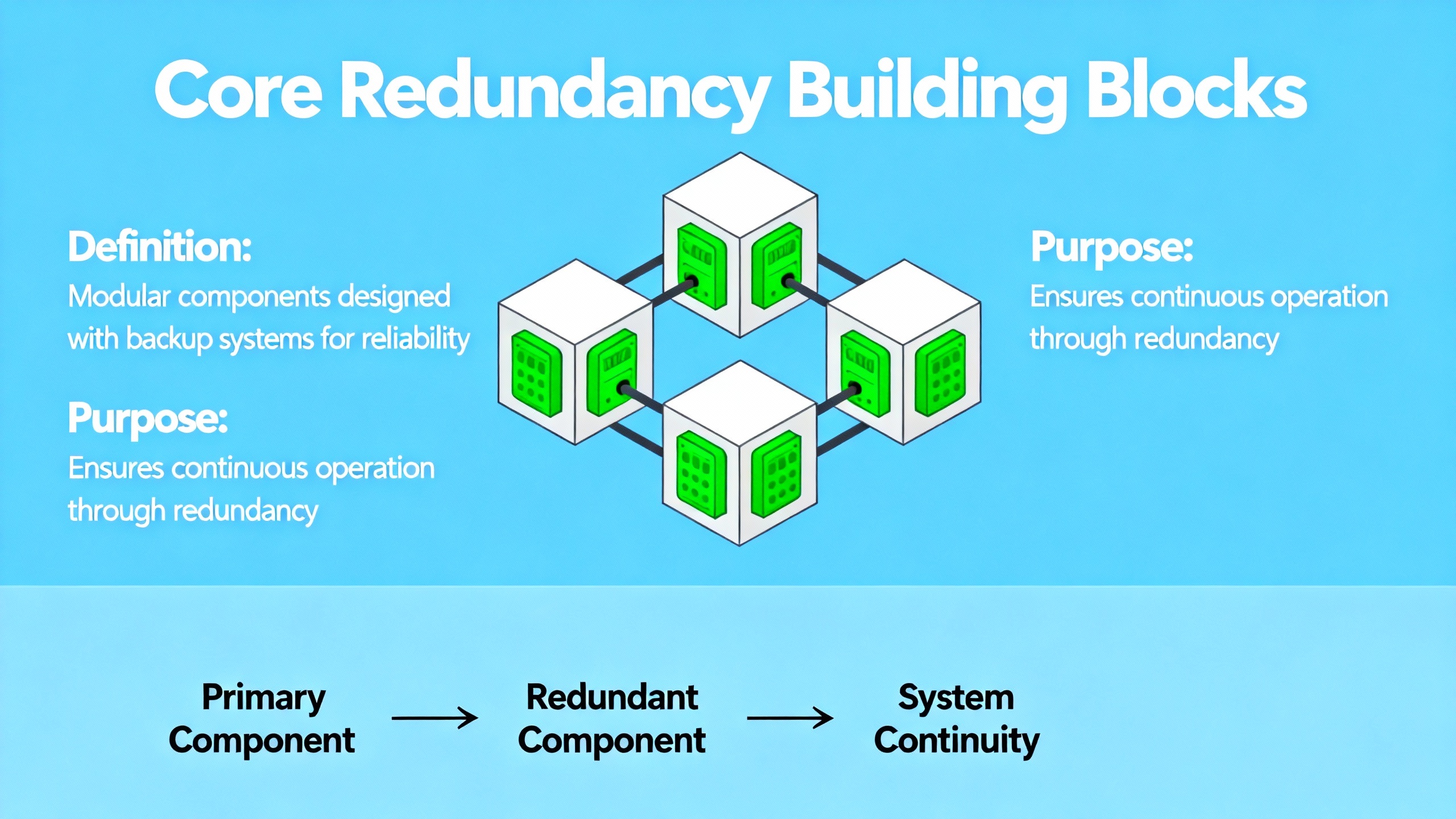

From a power-systems perspective, this means treating UPS, inverters, ATS, PLCs, and networks as one chain. If any link remains a single point of failure, whether it is a breaker, a controller, or a network switch, the whole chain is still fragile.
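The chain argument can be made concrete with a little arithmetic. The sketch below assumes the links fail independently and that every link must work for the load to stay up (a series system), so the chain availability is the product of the link availabilities. The component figures are hypothetical placeholders, not measured or vendor data.

```python
from math import prod


def series_availability(availabilities):
    """Availability of a chain in which every link must work (series system)."""
    return prod(availabilities)


def weakest_link(chain):
    """Return the (name, availability) pair with the lowest availability."""
    return min(chain.items(), key=lambda item: item[1])


# Hypothetical per-component availabilities, for illustration only.
chain = {
    "UPS": 0.9999,
    "inverter": 0.9995,
    "ATS": 0.9998,
    "PLC": 0.9999,
    "network switch": 0.999,
}

total = series_availability(chain.values())
name, value = weakest_link(chain)
print(f"chain availability: {total:.5f}")
print(f"weakest link: {name} ({value})")
```

Under these assumptions the chain is always less available than its weakest link, which is why hardening only the UPS does little if the network switch remains a single point of failure.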

In field work on data centers, water plants, and industrial campuses, three layers consistently determine whether a facility rides through a fault or not.

Vendors like Rockwell (ControlLogix) support fully redundant chassis with dedicated sync modules. Vertech rightly reminds us that you are not just buying a second CPU; you are buying extra racks, power supplies, communications, and engineering hours, and you still have to address I/O and code failures.

CISA’s emergency communications guidance adds a second, crucial point: your routing and radio/dispatch infrastructure also needs backup paths and power, or the best PLC redundancy will not matter during a regional event.

Not all redundancy is equal. Data from Maverick Power and Vaultas, along with Microsoft Azure and Cycle’s design guidance, show that the pattern matters as much as the hardware count.

Commonly useful configurations:

- N+1: One extra UPS, rectifier, or cooling unit beyond what is required. A strong baseline for most plants and control rooms.
- 2N: Two fully independent power and control paths that can each carry the full critical load, often justified for Tier III/IV-style data centers and large hospitals.

A nuance: cloud-style multi-region patterns described by Cycle and Microsoft do not map one-for-one to plant-floor controls, but the underlying principle of independent failure domains still applies. Separate controller racks, physically separated cable routes, and independent UPS feeds are the plant equivalent of “different zones or regions.”
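As a rough illustration of how the N+1 and 2N patterns differ in installed capacity, the sketch below counts units for a given critical load and checks how many unit failures each pattern tolerates. The load and unit ratings are hypothetical, and the model only counts capacity: it does not capture the path independence that 2N is really about.

```python
import math


def units_required(load_kw, unit_kw):
    """N: the minimum number of units needed to carry the critical load."""
    return math.ceil(load_kw / unit_kw)


def installed_units(load_kw, unit_kw, pattern):
    """Number of units installed under the 'N', 'N+1', or '2N' pattern."""
    n = units_required(load_kw, unit_kw)
    if pattern == "N":
        return n
    if pattern == "N+1":
        return n + 1
    if pattern == "2N":
        return 2 * n
    raise ValueError(f"unknown pattern: {pattern}")


def survives(load_kw, unit_kw, installed, failed):
    """True if the units still running can carry the full load."""
    return (installed - failed) * unit_kw >= load_kw


# Hypothetical example: 400 kW critical load served by 150 kW UPS modules.
load_kw, unit_kw = 400, 150
for pattern in ("N", "N+1", "2N"):
    count = installed_units(load_kw, unit_kw, pattern)
    tolerated = max(
        f for f in range(count + 1) if survives(load_kw, unit_kw, count, f)
    )
    print(f"{pattern}: {count} units, tolerates {tolerated} unit failure(s)")
```

In this example N+1 rides through one module failure while 2N rides through the loss of an entire set of N modules, which is the capacity side of the trade-off between the two patterns.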

JD Solomon’s “Four Horsemen” framework is a useful sanity check: as you add redundancy, watch complexity, independence, failure propagation, and human error. Two identical units on the same bus, in the same cabinet, fed from the same breaker, are not independent redundancy.

Even well-designed redundancy fails if it is never tested or maintained.
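One simple way to audit independence is to list what each redundant unit depends on upstream and look for overlap. The sketch below is a minimal illustration of that idea; the unit names and dependency labels are hypothetical, and a real audit would also cover cable routes, cooling, firmware, and operating procedures.

```python
def shared_dependencies(units):
    """Return the upstream elements that every redundant unit depends on."""
    dependency_sets = [set(deps) for deps in units.values()]
    if not dependency_sets:
        return set()
    return set.intersection(*dependency_sets)


# Hypothetical pair that looks redundant but shares everything upstream.
same_cabinet = {
    "UPS-A": {"bus-1", "cabinet-3", "breaker-12"},
    "UPS-B": {"bus-1", "cabinet-3", "breaker-12"},
}

# Hypothetical pair with separate buses, cabinets, and breakers.
separated = {
    "UPS-A": {"bus-1", "cabinet-3", "breaker-12"},
    "UPS-B": {"bus-2", "cabinet-7", "breaker-31"},
}

print(sorted(shared_dependencies(same_cabinet)))
print(sorted(shared_dependencies(separated)))
```

Any element returned by the check is a common point whose failure takes out every unit at once, so a non-empty result means the pair is duplicated hardware rather than independent redundancy.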

Based on experience and guidance from ACE, ISA, JD Solomon, and Apps Associates, I recommend treating redundant power and control as a living system with clear operating practices:

If one hour of outage costs $100,000.00 and a robust N+1 / 2N architecture plus testing program costs $250,000.00 more than a bare-bones design, the premium is recovered after 2.5 hours of avoided downtime. Two avoided incidents of a little over an hour each, over the life of the system, pay for the entire investment.

For critical infrastructure, that is usually the easiest business case in the facility.
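The business-case arithmetic can be sketched in a few lines. The cost figures are the ones quoted above; the incident durations are assumptions chosen only to illustrate the break-even point.

```python
def break_even_hours(extra_cost, cost_per_hour):
    """Hours of avoided downtime needed to recover the redundancy premium."""
    return extra_cost / cost_per_hour


def net_benefit(extra_cost, cost_per_hour, avoided_hours):
    """Avoided outage cost minus the extra cost of the redundant design."""
    return cost_per_hour * avoided_hours - extra_cost


extra_cost = 250_000      # premium over a bare-bones design, in dollars
cost_per_hour = 100_000   # cost of one hour of outage, in dollars

# Assumed durations (hours) of two incidents avoided over the system's life.
avoided_incidents = [1.5, 1.5]

hours = break_even_hours(extra_cost, cost_per_hour)
benefit = net_benefit(extra_cost, cost_per_hour, sum(avoided_incidents))
print(f"break-even after {hours} hours of avoided downtime")
print(f"net benefit for the assumed incidents: ${benefit:,.0f}")
```

The same two functions can be rerun with site-specific outage costs, and with the $1–5 million incident figures cited earlier the premium is recovered by a single avoided event.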
